Learn the fastest ways to deindex pages from Google search.

Here are 4 easy ways to deindex pages from Google search.

Most businesses worry about getting all their pages indexed.

But not all pages are created equal. Indexing some of them can actually hurt your rankings and authority. Worse, you usually don’t even know these pages have been indexed.

Google is good at indexing, sometimes too good, and indexes pages that shouldn’t be indexed.

Today you’ll learn how to deindex a page from Google.

You’ll discover which pages you should deindex, and how, to increase your site’s authority and rank higher.

First things first: why would you need to deindex pages from Google? Let’s find out.

Why Deindex Pages From Google?

There are many reasons to deindex certain pages from Google. The most common one is duplicate content. In other cases, the pages were simply never meant to appear in search results.

Take a Thank You page, for example. It’s the page you show users after they complete a certain action on your website. You’ll want to deindex it, because you don’t want people to find it through Google search without completing that action first.

Indexing pages like these may lead to severe problems, such as:

- Hurting your authority (and rankings)

- Slowing down the indexing of important pages

To prevent that, you must deindex these pages from search results.

Noindex vs. Nofollow

Since these two terms are easy to confuse, let’s look at the differences.

When using a noindex tag, we tell search engines that while they can crawl the page to understand its content, they cannot index the page to appear in search engine results.

The nofollow tag, on the other hand, tells search engines not to follow the links on that page, so they shouldn’t pass any authority to the pages linked within your content. (Google treats nofollow as a hint rather than a strict rule.)
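To make the difference concrete, here is a minimal Python sketch that reads the content of a robots meta tag and reports what it allows. The function name `robots_directives` is hypothetical. It only illustrates that noindex and nofollow are independent: one controls indexing, the other controls link following.

```python
def robots_directives(content: str) -> dict:
    """Interpret the content attribute of a robots meta tag.

    Directives that are absent default to allowed (index, follow).
    """
    tokens = {t.strip().lower() for t in content.split(",") if t.strip()}
    # "none" is shorthand for "noindex, nofollow"
    if "none" in tokens:
        tokens |= {"noindex", "nofollow"}
    return {
        "can_index": "noindex" not in tokens,
        "can_follow": "nofollow" not in tokens,
    }


print(robots_directives("noindex"))   # {'can_index': False, 'can_follow': True}
print(robots_directives("nofollow"))  # {'can_index': True, 'can_follow': False}
```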

You may read more about how to use the nofollow link in our previous article.

How to Check If a URL Is Deindexed?

You might be wondering, “How do I know whether a page is indexed?”

There are two ways to figure this out.

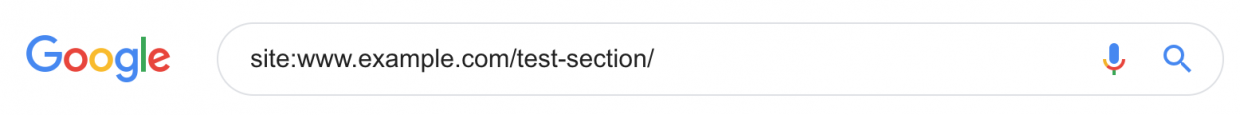

1. Check Manually In Google

The easiest way to find out whether a page has been deindexed is to check Google’s search results directly.

Go to Google and type in site:yoursite.com, as you can see below.

You’ll see an approximate count of indexed pages right below the search bar, with the indexed pages themselves listed in the search results.

But what if you want to check a specific page?

You can do the same, but instead of typing “site:yoursite.com,” you type site: followed by the URL of the specific page you want to check.

For example, the page above should never be indexed.

It’ll help you find out whether a specific page is indexed so you can remove it later. If the page has been deindexed, Google will show no results.
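If you check many pages, building the site: query links by hand gets tedious. Below is a small Python sketch that builds the search link for a domain or a single page. `site_search_url` is a hypothetical helper. It only builds the link for you to open in a browser and does not fetch or parse Google’s results.

```python
from urllib.parse import urlencode


def site_search_url(target: str) -> str:
    """Build a Google search URL for a site: query.

    `target` can be a whole domain ("example.com") or a single page
    ("example.com/thank-you").
    """
    # Drop the scheme; the site: operator doesn't need it
    for prefix in ("https://", "http://"):
        if target.startswith(prefix):
            target = target[len(prefix):]
            break
    return "https://www.google.com/search?" + urlencode({"q": f"site:{target}"})


print(site_search_url("https://example.com/thank-you"))
# https://www.google.com/search?q=site%3Aexample.com%2Fthank-you
```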

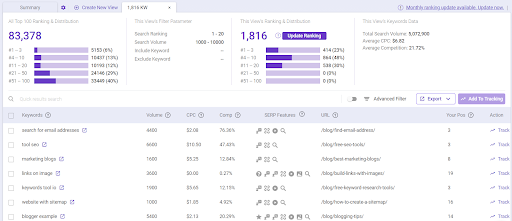

Extra tip: Use BiQ’s Rank Intelligence to check all your ranking keywords and pages.

This rank checker tool shows you your indexed pages and how they rank in search results. It’s also easy to use.

Enter your website URL into Rank Intelligence. Then select the country where your audience is based.

Once you’ve entered the domain name and your audience’s country, you’ll get valuable insights such as your ranking keywords and pages.

These insights show you the search terms people use to reach your website.

Check out how you can use this feature to gain an advantage over your competitors.

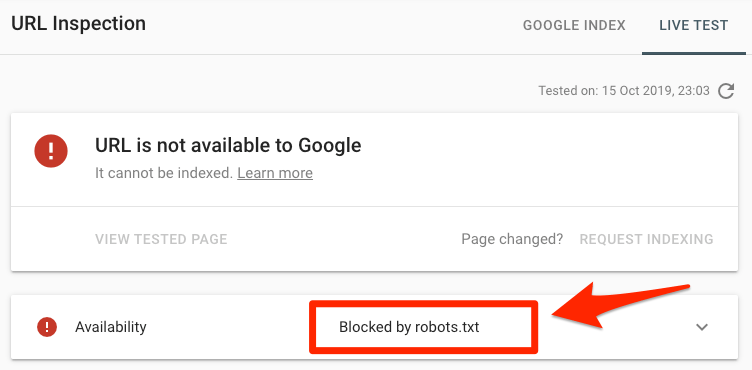

2. Check In Google Search Console

Google Search Console is especially useful for in-depth insights.

You can quickly view the list of all indexed URLs, or type a specific URL into the inspection search box at the top of the page to learn more about it.

If you have an indexed page, you’ll get the “URL is on Google” message.

If you have deindexed the page, you’ll get the “URL is not on Google” message.

There’s also one very helpful feature.

You can look at the list of all excluded pages to see which pages haven’t been indexed.

It’ll also show you why. This speeds up the process, because you don’t have to check manually which pages have been deindexed.

How To Deindex Pages From Google?

There are multiple ways to remove a URL from Google, but the following are the easiest and most widely used methods:

- Delete the content

- Mark as noindex

- Fix duplicate content

- Use the URL removal tool

For each method, we’ll also look at the exact situations where it applies, so you can deindex your pages correctly.

1. Delete The Content

There’s nothing easier than removing content from your own website.

You simply find a page that is indexed but serves no purpose and remove it from your site. It’s the best way to deal with indexed pages you didn’t know existed.

A good example is our automatically generated sample page.

It’s a completely irrelevant, low-quality webpage. There’s no point in it ranking in Google, nor existing on our site at all.

There’s no benefit in keeping it, and it will only hurt our authority and slow down the indexing of other webpages.

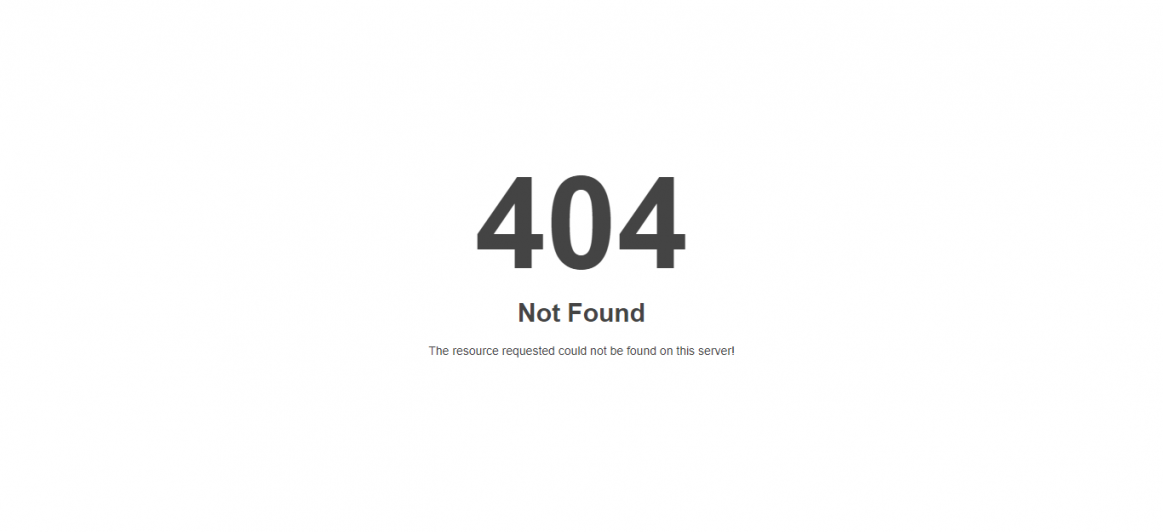

Once you remove the page, its URL will return a 404 Not Found error.

This page will be removed from the search results shortly after the page is recrawled by the search engine.

Boom, and it’s gone.
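Before waiting for a recrawl, you may want to confirm the deleted URL really returns an error. Here is a minimal Python sketch using only the standard library. The helper names are hypothetical. Treating 410 Gone the same as 404 is an assumption that matches common practice, because both tell crawlers the page is no longer there.

```python
import urllib.error
import urllib.request

# 404 Not Found and 410 Gone both signal that the page no longer exists
REMOVED_STATUSES = {404, 410}


def looks_removed(status: int) -> bool:
    return status in REMOVED_STATUSES


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code of `url` using a HEAD request.

    Redirects are followed, so a page redirected elsewhere reports the
    final page's status. Some servers reject HEAD (405); use GET then.
    """
    req = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # urlopen raises on 4xx/5xx; the status code is on the exception
        return err.code


# Usage (needs network access; the URL is a placeholder):
# status = fetch_status("https://example.com/sample-page/")
# print(status, looks_removed(status))
```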

How to Remove a WordPress Page

Removing a page when running WordPress is super easy.

Just open your WordPress dashboard and go to Pages > All Pages.

Then hover over the page you want to remove and click Trash.
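If you manage many pages, the same deletion can be done through the WordPress REST API (`DELETE /wp-json/wp/v2/pages/<id>`). The Python sketch below only builds the request and does not send it. It assumes your site has the REST API enabled and that you authenticate with an Application Password over HTTPS. The function name, the page ID, and the credentials are hypothetical placeholders.

```python
import base64
import urllib.request


def build_delete_page_request(site: str, page_id: int, user: str,
                              app_password: str, force: bool = False):
    """Build (but don't send) a REST API request that deletes a page.

    Without force=True, WordPress moves the page to the Trash, like the
    dashboard does; force=True deletes it permanently.
    """
    url = f"{site.rstrip('/')}/wp-json/wp/v2/pages/{page_id}"
    if force:
        url += "?force=true"
    # Application Passwords use HTTP Basic authentication
    token = base64.b64encode(f"{user}:{app_password}".encode()).decode()
    return urllib.request.Request(
        url, method="DELETE", headers={"Authorization": f"Basic {token}"}
    )


req = build_delete_page_request("https://example.com/", 42, "admin", "abcd efgh")
print(req.get_method(), req.full_url)
# Send it with urllib.request.urlopen(req) once the values are real.
```

You can find a page’s ID in the dashboard: it’s the `post=` number in the page’s edit URL.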

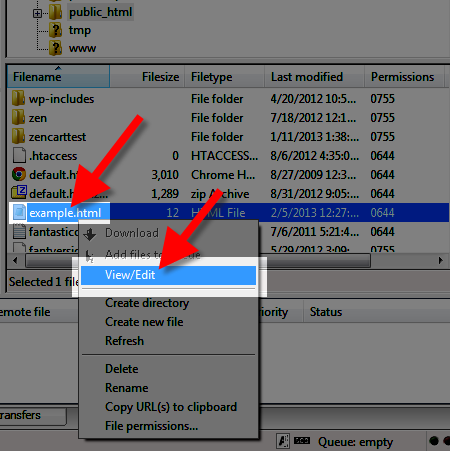

How To Remove a Non-WordPress Page

It’s a bit more complicated than with WordPress.

You’ll need to connect to your web hosting via FTP and remove the webpage file from there.

You can do that with any FTP client, such as FileZilla.

Open FileZilla, go to the Site Manager, fill in your credentials (host, protocol, username, and password), and connect to your website’s files.

You can find the credentials to your FTP in your hosting dashboard.

Then open the public_html folder (on some hosts it’s called www or htdocs) and find the webpage you want to remove.

Now you’ll just need to press the Delete button, and the page is gone.
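The same steps can be scripted with Python’s built-in `ftplib`. This is a minimal sketch under a few assumptions: your host supports FTP over TLS (`FTP_TLS`), the web root is `public_html`, and `page_path` is relative to that folder. The function name and arguments are hypothetical. If your host only offers SFTP, `ftplib` won’t work, and you’d need an SFTP client instead. The `ftp_factory` parameter exists so you can swap in plain `ftplib.FTP` or a test double.

```python
import ftplib
import posixpath


def delete_page_via_ftp(host, user, password, page_path,
                        web_root="public_html", ftp_factory=ftplib.FTP_TLS):
    """Delete one page file from the web root and return its remote path."""
    ftp = ftp_factory(host)  # connects to the server
    try:
        ftp.login(user, password)
        ftp.cwd(web_root)
        # page_path is relative to the web root, e.g. "sample-page.html"
        ftp.delete(page_path)
        return posixpath.join(web_root, page_path)
    finally:
        ftp.quit()


# Usage (placeholder credentials):
# delete_page_via_ftp("ftp.example.com", "user", "secret", "sample-page.html")
```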

2. Mark As Noindex

Sometimes you’ll have webpages you don’t want to remove, but you don’t want them indexed either. That’s usually the case with pages such as the Thank You page.

All you want to do is just tell Google, “Hey, don’t index this page.”

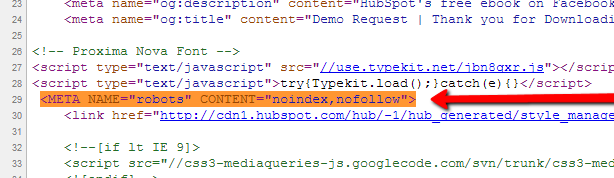

We’ll use the robots noindex meta tag for that.

How to Use the Noindex Meta Tag

The noindex tag tells search engines not to index the page.

Open the HTML file of the webpage you want to deindex and go to its <head> section.

There, add the following snippet: `<meta name="robots" content="noindex">`

It’ll prohibit search engine bots from indexing your page.

The bots will drop the page from search results the next time they recrawl it.
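Once the tag is in place, it’s worth checking that it actually appears in the HTML your server sends. Here is a minimal Python sketch built on the standard `html.parser` module. The class and function names are hypothetical. It looks only at `<meta>` tags named `robots` or `googlebot`. It does not check the `X-Robots-Tag` HTTP header, which is another way to send noindex.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the content of <meta name="robots"/"googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.contents = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Attribute names arrive lowercased; values may be None
        attrs = {k: (v or "") for k, v in attrs}
        if attrs.get("name", "").lower() in ("robots", "googlebot"):
            self.contents.append(attrs.get("content", "").lower())


def has_noindex(html: str) -> bool:
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    for content in finder.contents:
        tokens = {t.strip() for t in content.split(",")}
        # "none" is shorthand for "noindex, nofollow"
        if "noindex" in tokens or "none" in tokens:
            return True
    return False


print(has_noindex('<head><meta name="robots" content="noindex"></head>'))  # True
```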

3. Fix Duplicate Content

Sometimes you can have duplicate content.

It’s content that appears on the internet in more than one place: you can access the same content through multiple URLs.

It usually happens automatically, without you knowing about it, but it can have a negative impact on your rankings.

It may confuse search engines, so they may not be able to rank your webpage properly.

There’s a good chance your website has duplicate content. By some estimates, around 50% of all websites do.

We’ll look at how to identify the duplicate content and fix it.

It’ll allow you to deindex pages that are just duplicates, boost your rankings, and increase traffic.

How to Identify Duplicate Content?

You can find duplicate content with the Siteliner tool.

Type in your URL, hit Go and get the duplicate content report.

Click on Duplicate Content to see the individual URLs.

Click on the URL, and then hit View page summary.

You’ll get a table with all the duplicates.
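To see the basic idea behind such a report, here is a minimal Python sketch that groups URLs whose text is identical after normalizing whitespace and case. It assumes you already have each page’s extracted text in a dictionary, and the helper names are hypothetical. It catches only exact duplicates. Tools like Siteliner also measure partial overlap, which this sketch does not.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    # Normalize case and whitespace so trivial differences don't hide duplicates
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_exact_duplicates(pages: dict) -> list:
    """Group URLs with identical normalized text. `pages` maps URL -> text."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


pages = {
    "https://example.com/shoes": "Red shoes  on sale",
    "https://example.com/shoes?ref=ad": "red shoes on sale",
    "https://example.com/about": "About us",
}
print(find_exact_duplicates(pages))
# [['https://example.com/shoes', 'https://example.com/shoes?ref=ad']]
```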

How to Fix Duplicate Content

The easiest way to fix duplicate content is to use “rel=canonical.”

First, pick the page you want to show in the SERP. This will be your main page. Then add a rel=canonical link pointing to that main page on every duplicate page.

![Canonical Tags [2021 SEO] - Moz](https://biq.cloud/wp-content/uploads/2021/06/canonical-view-source.png)

The canonical tag tells search engines that the page is just a duplicate of the main page.

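This fixes the duplicate content problem: Google will usually drop the duplicate from search results and rank the main page instead. Keep in mind that Google treats the canonical tag as a strong hint, not a strict directive.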
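On each duplicate, the tag looks like `<link rel="canonical" href="https://example.com/main-page/">`. To confirm that a duplicate points where you expect, here is a minimal Python sketch that extracts the canonical URL from a page’s HTML. The class and function names are hypothetical. Relative hrefs are resolved against the page’s own URL.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.href = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.href is not None:
            return
        attrs = dict(attrs)
        rel = (attrs.get("rel") or "").lower().split()
        if "canonical" in rel and attrs.get("href"):
            self.href = attrs["href"]


def canonical_url(html: str, page_url: str):
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    # Resolve relative hrefs against the duplicate page's own URL
    return urljoin(page_url, finder.href) if finder.href else None


dup = '<head><link rel="canonical" href="/shoes"></head>'
print(canonical_url(dup, "https://example.com/shoes?ref=ad"))
# https://example.com/shoes
```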

Learn more about duplicate content and how to fix it in my post.

4. URL Removal Tool

This way of deindexing should only be a last resort.

It’s a temporary solution that hides your content for a limited period of time. Use it only in the most extreme cases, such as data leaks, security issues, and similar emergencies.

It usually hides the page very quickly, typically within a day.

How To Use Google Removal Tool

Log in to Google Search Console and click on Removals.

Select the Temporary Removals tab in the upper menu.

Click on New Request to submit the URL you want to temporarily hide from the search results.

A pop-up window will appear.

Select Temporarily remove URL and enter the URL you want to hide from the search results.

Check Remove this URL only and hit Next.

You’ll then need to press Submit Request, and you’re done.

You’ll then see the request, along with all your other submitted URLs, in the Submitted requests list. You can check its status to see whether Google has hidden the page yet.

Remember, this is only a temporary solution.

Eventually, you’ll need to remove the page or mark it as noindex, because once the removal period ends, the URL will be visible again.

This period varies across search engines.

For Google, it’s 6 months, while for Bing, it’s only 3 months.
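To plan when to put a permanent fix in place, you can work out roughly when a hidden URL may come back. The Python sketch below does that date arithmetic. It assumes the periods quoted above, rounded to 180 days for Google and 90 days for Bing. Those day counts and the helper name are approximations for illustration, not official figures.

```python
from datetime import date, timedelta

# Rounded assumptions: ~6 months for Google, 3 months for Bing
BLOCK_DAYS = {"google": 180, "bing": 90}


def reappears_around(submitted: date, engine: str) -> date:
    """Approximate date a temporarily removed URL may become visible again."""
    return submitted + timedelta(days=BLOCK_DAYS[engine])


print(reappears_around(date(2024, 1, 1), "google"))  # 2024-06-29
print(reappears_around(date(2024, 1, 1), "bing"))    # 2024-03-31
```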

How To Deindex Pages: Best Practices

We’ve talked about strategies to deindex pages from SERP.

But there are a few tips and tricks that can speed up the whole process. You’ll also discover mistakes you must avoid at all costs.

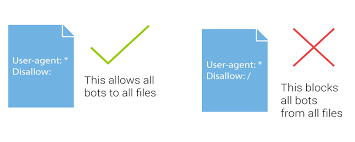

1. Don’t Confuse Noindex with Disallow

There’s a big misconception on the Internet.

Some SEOs will tell you that you can easily hide your webpages from search results by disallowing them in the Robots.txt file.

Robots.txt is a file that tells search engine bots how they should crawl your website.

If you disallow crawling of your page, it won’t get indexed, right?

Well, not necessarily…

Disallowing crawling of a page stops bots from reading its code: they skip it entirely. That means they’ll never see the noindex tag you added. Worse, a disallowed URL can still end up indexed (without its content) if other pages link to it.

So disallowing a page can actually do the opposite of what you want and stop it from being deindexed.

Make sure Robots.txt doesn’t block any page that carries a noindex tag.
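You can catch this conflict with Python’s built-in `urllib.robotparser`. The sketch below lists noindex URLs that robots.txt blocks, which are pages where bots can never see the tag. The function name is hypothetical, and you supply the list of noindexed URLs yourself. One caveat: the standard-library parser uses simple prefix matching and doesn’t support Google’s `*` and `$` wildcards. Rules that rely on them can give different answers from what Googlebot does.

```python
from urllib.robotparser import RobotFileParser


def blocked_noindex_pages(robots_txt: str, noindex_urls, agent="Googlebot"):
    """Return noindex URLs that robots.txt disallows for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindex_urls if not parser.can_fetch(agent, url)]


robots = """User-agent: *
Disallow: /thank-you
"""
print(blocked_noindex_pages(robots, ["https://example.com/thank-you",
                                     "https://example.com/order-completed"]))
# ['https://example.com/thank-you']
```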

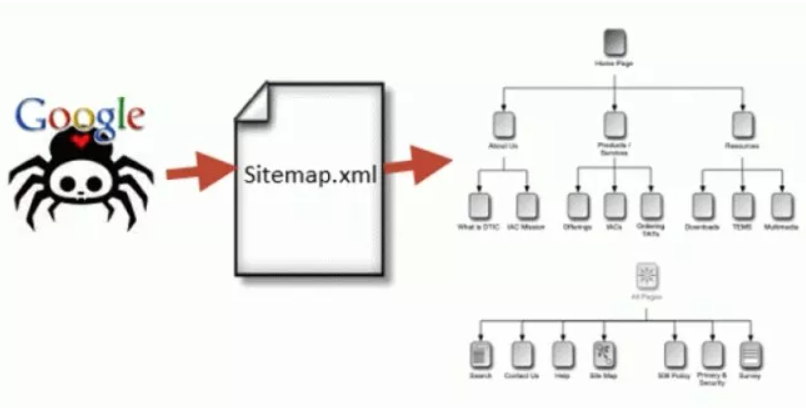

2. Collisions Between Sitemap.xml & Robots.txt

Sitemap.xml is a list of the webpages on your website that you want search engines to find.

Search engine bots read the sitemap to discover your webpages and then decide whether to index them.

Robots.txt, on the other hand, tells bots which pages they’re allowed to crawl.

But what if the two collide? You tell bots to crawl a page in Sitemap.xml, but disallow crawling it in Robots.txt.

These conflicting signals can cause errors that keep the page from being indexed or deindexed properly: the opposite of what you want.

Ensure there are no collisions between Sitemap.xml and Robots.txt, and keep the pages you want deindexed out of your sitemap.
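Here is a minimal Python sketch of that check. It reads the `<loc>` entries from a sitemap and reports any that robots.txt disallows. The helper names are hypothetical. It handles a plain `<urlset>` sitemap, not a sitemap index file. Like any check built on the standard-library `robotparser`, it doesn’t understand Google’s `*` and `$` wildcards.

```python
import xml.etree.ElementTree as ET
from urllib.robotparser import RobotFileParser

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str):
    root = ET.fromstring(sitemap_xml)
    locs = root.findall("sm:url/sm:loc", SITEMAP_NS)
    return [loc.text.strip() for loc in locs if loc.text and loc.text.strip()]


def sitemap_robots_collisions(sitemap_xml, robots_txt, agent="Googlebot"):
    """URLs listed in the sitemap that robots.txt disallows for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in sitemap_urls(sitemap_xml) if not parser.can_fetch(agent, u)]


sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/</loc></url>"
    "<url><loc>https://example.com/private/report</loc></url>"
    "</urlset>"
)
robots = "User-agent: *\nDisallow: /private/"
print(sitemap_robots_collisions(sitemap, robots))
# ['https://example.com/private/report']
```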

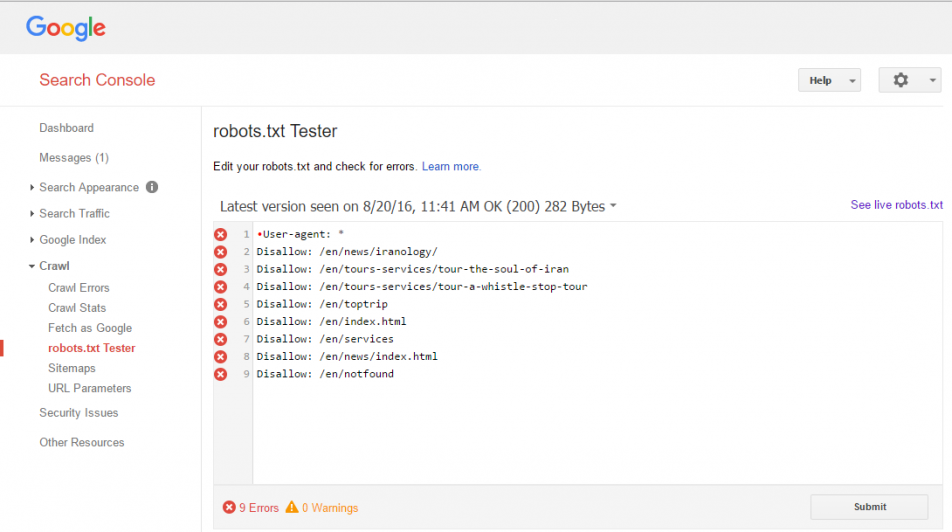

3. Test Robots.txt File & Sitemap.xml

Everybody makes mistakes.

But what you may not realize is that one small mistake in Robots.txt can completely kill your traffic.

The same thing can happen with the Sitemap.xml.

That’s why you should check if both of them work correctly and are without any errors (or you risk hiding your whole website from SERP).

There are two easy tools you can use for that.

Google Robots.txt Tester

It’s a very simple tool. (Note that Google has since retired it in favor of the robots.txt report in Search Console.)

You just need to copy-paste your Robots.txt content and hit Submit.

It’ll test your Robots.txt and show you errors.

Google Search Console

The process is very similar for Sitemap.xml.

You just need to upload your sitemap.xml file to your site and paste its URL into the Sitemaps section of Google Search Console.

Press Submit, and your sitemap.xml will be sent to Google.

If everything works as it should, you’ll see a green Success label under Status.

You can click on the sitemap in the list to show more in-depth stats and check for any errors.

If there are any errors, you should fix them and resubmit the sitemap.
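Before resubmitting, you can catch some common sitemap problems locally. The Python sketch below checks that the file is well-formed XML with a `<urlset>` root. It also checks that every `<loc>` is an absolute http(s) URL on your own host, and that the file stays within the sitemaps.org limit of 50,000 URLs. The function name is hypothetical, and the same-host check is an assumption about what usually indicates a mistake. It’s far less thorough than Search Console’s own report.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"
MAX_URLS = 50_000  # per-file limit in the sitemaps.org protocol


def sitemap_problems(sitemap_xml: str, site_host: str):
    """Return a list of human-readable problems found in a sitemap."""
    try:
        root = ET.fromstring(sitemap_xml)
    except ET.ParseError as err:
        return [f"not well-formed XML: {err}"]
    if root.tag != NS + "urlset":
        return [f"unexpected root element {root.tag!r}"]
    problems = []
    locs = [(loc.text or "").strip() for loc in root.iter(NS + "loc")]
    if len(locs) > MAX_URLS:
        problems.append(f"{len(locs)} URLs exceeds the {MAX_URLS} limit")
    for loc in locs:
        parts = urlparse(loc)
        if parts.scheme not in ("http", "https") or not parts.netloc:
            problems.append(f"not an absolute URL: {loc!r}")
        elif parts.netloc != site_host:
            problems.append(f"URL on another host: {loc}")
    return problems


good = ('<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://example.com/</loc></url></urlset>")
print(sitemap_problems(good, "example.com"))  # []
```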

However, you may not need these tools much nowadays.

That’s because you can generate sitemap.xml and Robots.txt automatically, which greatly reduces the risk of errors.

Learn more about that in my comprehensive guide on XML sitemaps.

Conclusion

Deindexing pages can be beneficial, especially if these pages are low-quality or irrelevant, such as a “Thank you” or “Order Completed” page. These pages shouldn’t rank in search results, so you should deindex them.

You’ve learned about multiple ways to deindex pages from Google.

Which one of them will you try next?

Or did I forget to mention something important?

Either way, let me know in the comment section right now.